1/ I'm often confronted with skepticism that neural network models of the brain are intelligible, or that they're even proper models at all, considering how "different they look" from real brains.

2/ Sometimes the best approach to a tough science question is to step back and take a philosophical viewpoint. Together with my amazing colleague @luosha (an actual professional philosopher), that's what we've done in two new companion papers.

3/ Part I (https://arxiv.org/abs/2104.01490) is about mechanisms. Philosophers have argued that mechanistic models should map to an explanandum's parts, mirror their internal organization, and reproduce their activities. Do NN models fit this model-mechanism-mapping ("3M") framework?

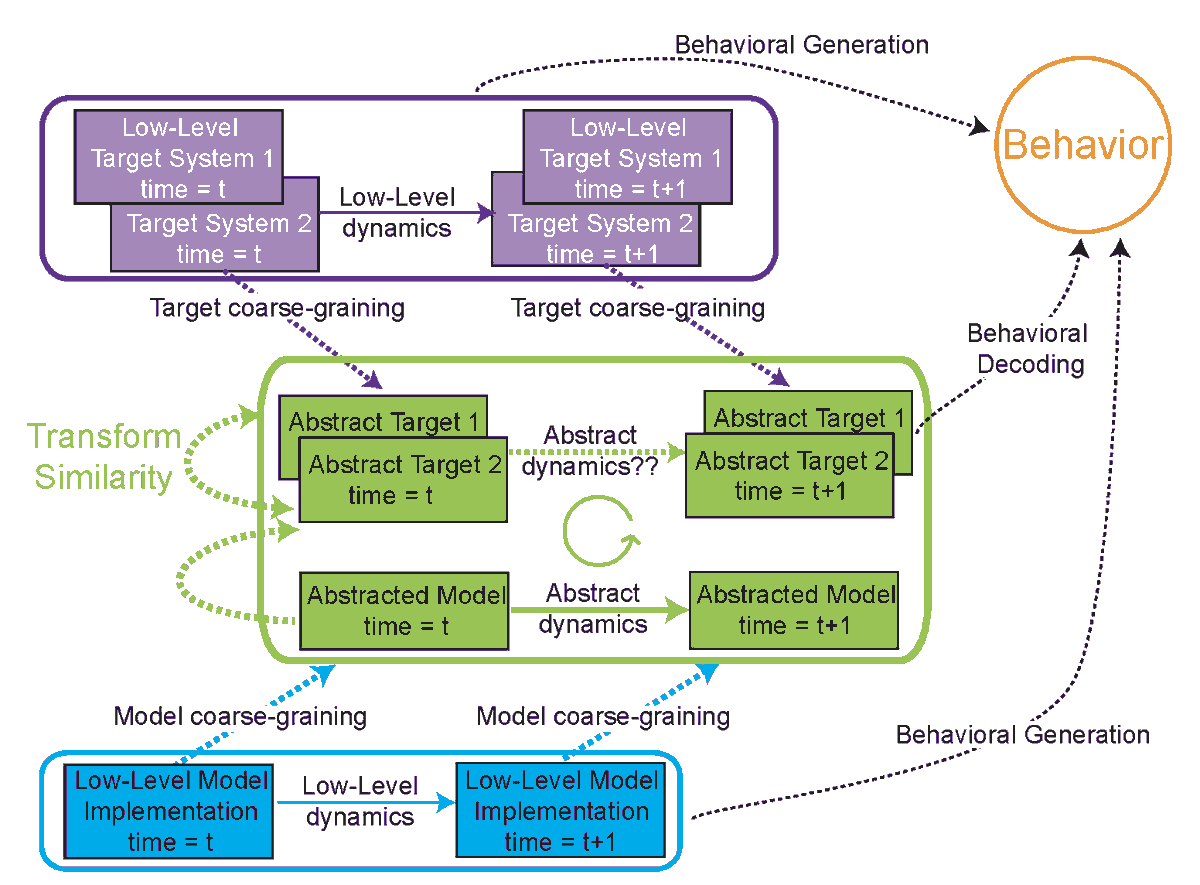

4/ We argue that NNs can be meaningfully mechanistic models -- given some innovations in understanding "mapping". We propose "3M++", an expanded concept for how to assess brain models that makes explicit the role of abstraction in both computational and experimental neuroscience.

6/ First, NN models require some amount of abstraction, and shouldn't be forced to use the most detailed possible level of description. But the abstraction must be sufficiently fine-grained to produce key behaviors of the target system. Too coarse an abstraction won't be "runnable".

7/ Second, no two individuals' brains are identical. Whatever mapping transform is needed to judge a given brain area as "similar" between animals, but different from other brain areas within any single animal -- that is what we should use to map models to brain data.
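To make the transform-similarity idea in 7/ concrete, here is a minimal sketch on synthetic data. Everything in it is an assumption for illustration: the names (`fit_linear_map`, `transform_similarity`, `simulate_area`) are hypothetical, the "recordings" are simulated, and ridge-regularized linear regression is just one possible choice of transform class. The point is only the logic: the same mapping procedure should score the same area across animals as similar, a different area as dissimilar, and is then reused unchanged to score a model.

```python
import numpy as np


def fit_linear_map(source, target, ridge=1e-3):
    """Ridge-regularized least squares: find W such that source @ W ~ target."""
    n_features = source.shape[1]
    gram = source.T @ source + ridge * np.eye(n_features)
    return np.linalg.solve(gram, source.T @ target)


def transform_similarity(source, target, n_train, ridge=1e-3):
    """Fit the map on the first n_train stimuli, then return the mean
    held-out correlation between predicted and actual target units."""
    weights = fit_linear_map(source[:n_train], target[:n_train], ridge)
    predicted = source[n_train:] @ weights
    actual = target[n_train:]
    corrs = [
        np.corrcoef(predicted[:, j], actual[:, j])[0, 1]
        for j in range(actual.shape[1])
    ]
    return float(np.mean(corrs))


def simulate_area(latents, n_units, noise, rng):
    """Hypothetical 'recording': a random linear readout of shared latents plus noise."""
    readout = rng.normal(size=(latents.shape[1], n_units))
    return latents @ readout + noise * rng.normal(size=(latents.shape[0], n_units))


rng = np.random.default_rng(0)
n_stimuli, n_latents = 200, 5
area_a_latents = rng.normal(size=(n_stimuli, n_latents))
area_b_latents = rng.normal(size=(n_stimuli, n_latents))  # a different area: unrelated code

animal1_area_a = simulate_area(area_a_latents, 20, 0.5, rng)
animal2_area_a = simulate_area(area_a_latents, 20, 0.5, rng)
animal2_area_b = simulate_area(area_b_latents, 20, 0.5, rng)
model_layer = simulate_area(area_a_latents, 30, 0.1, rng)  # stand-in for an NN layer

# Same transform class and procedure in all three comparisons.
same_area = transform_similarity(animal1_area_a, animal2_area_a, n_train=150)
other_area = transform_similarity(animal1_area_a, animal2_area_b, n_train=150)
model_score = transform_similarity(model_layer, animal2_area_a, n_train=150)

print(f"animal-to-animal, same area:      {same_area:.2f}")
print(f"animal-to-animal, different area: {other_area:.2f}")
print(f"model-to-animal:                  {model_score:.2f}")
```

In this toy setup the animal-to-animal score for the same area acts as the yardstick: a model is judged by how close its score comes to that inter-animal level, not by whether it matches any one brain unit-for-unit.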

8/ With these two concepts (runnability and transform similarity) in mind, we show that some neural network models are actually pretty good as mechanistic models of certain brain systems.

9/ Of course, NN models are far from perfect. But we think the 3M++ framework's concepts will transcend the specifics of today's NNs, and helpfully apply to improved model classes of the future (with different, and perhaps better, abstractions).

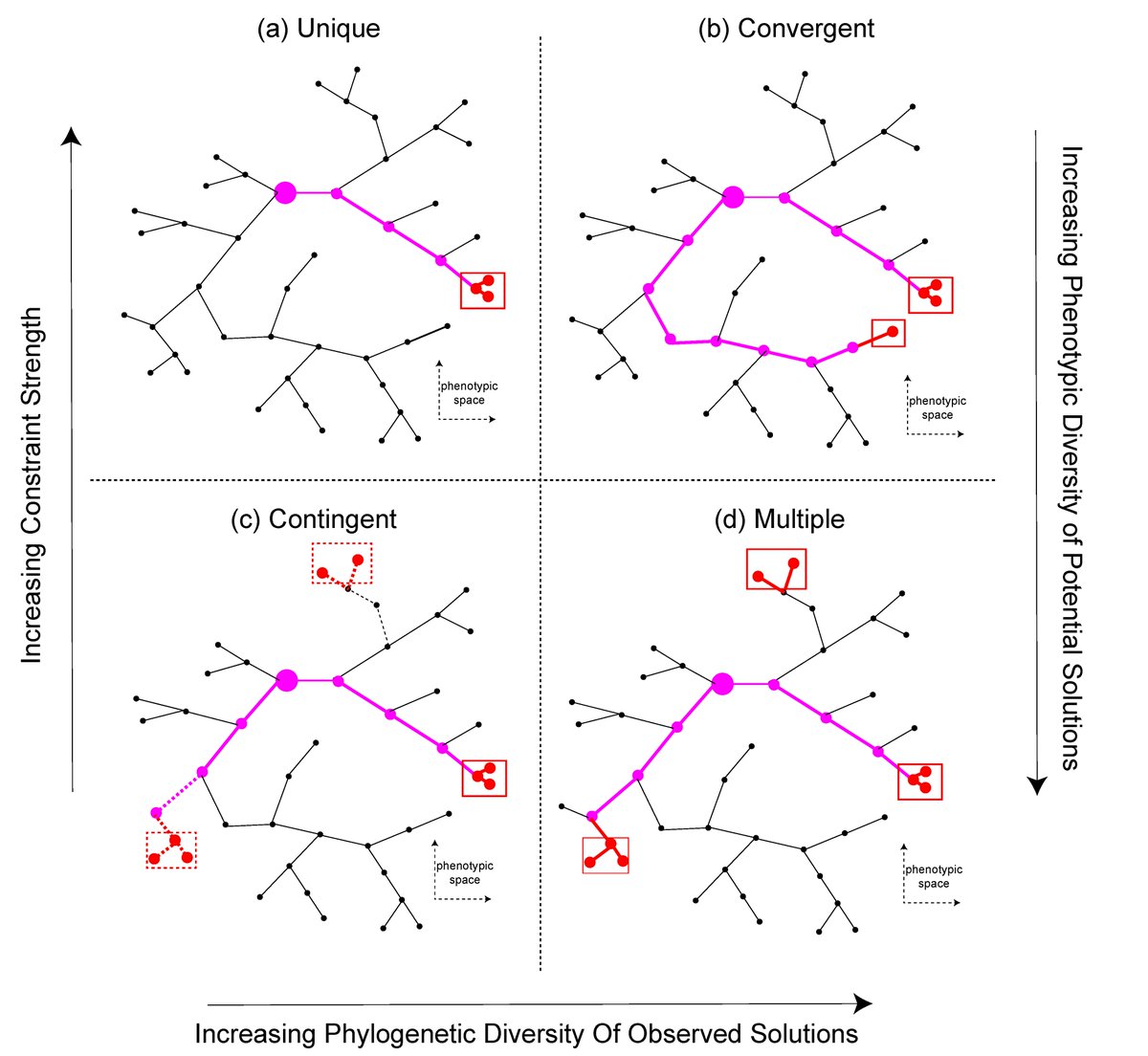

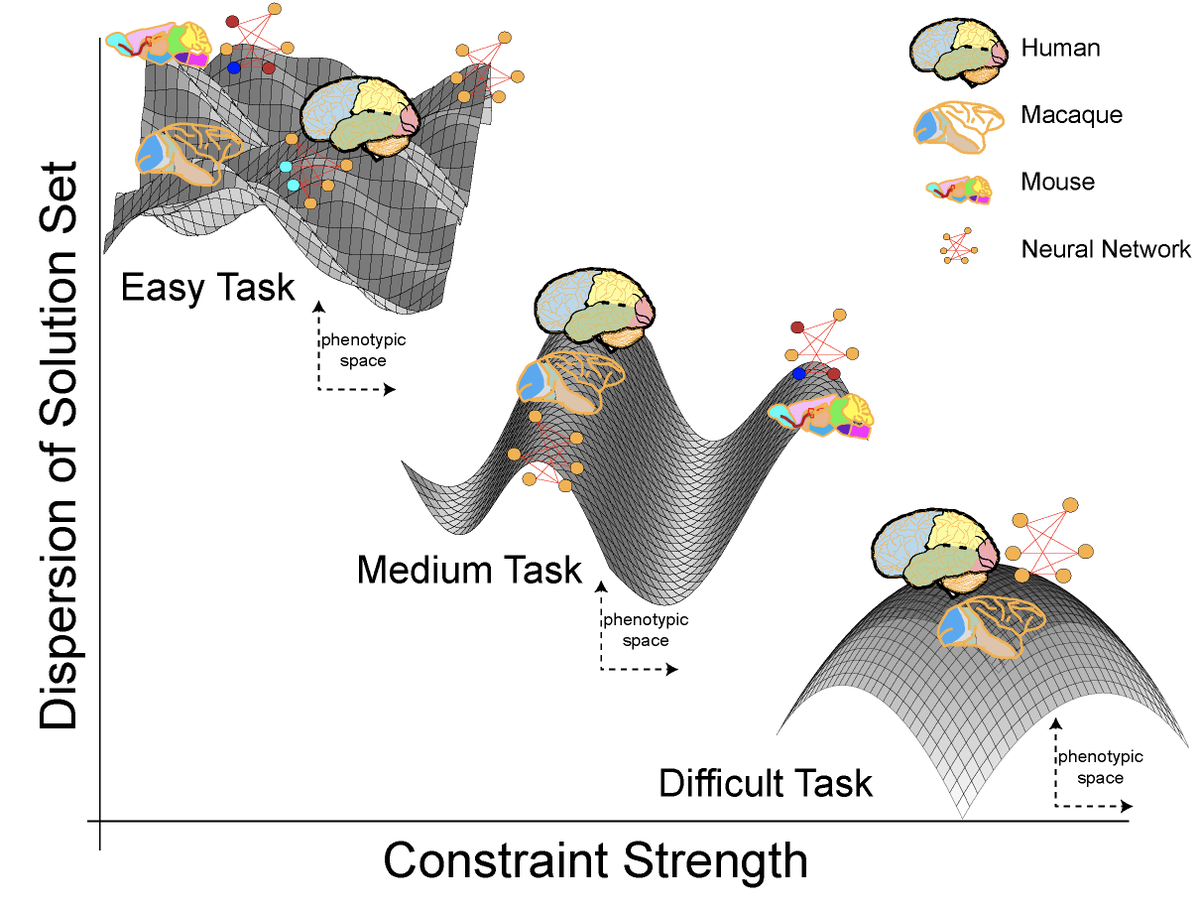

10/ Part II (https://arxiv.org/abs/2104.01489) is about intelligibility. We argue that in certain circumstances deep NN models are intelligible (and not just "black boxes") - when intelligibility is understood in evolutionary constraint-based terms.

11/ We argue that task-optimized NN models that are trained toward a meaningful high-level goal, and which have good neural predictivity as a by-product, are "intelligible" -- or at least, more intelligible than models which need to be fit to the neural data directly.

12/ When task-optimized NN models match neural data, this is probably not a miracle: it is likely because the constraints on both the model and the real organism have led to convergent evolution.
