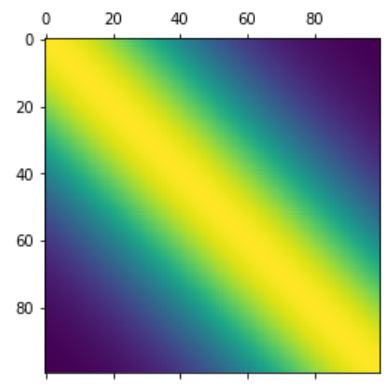

1/ I've taken positional embeddings in transformers for granted, but now, looking at them: is the main reason for the unnatural sin/cos formulation the "nice" autocovariance structure? NLP Twitter, help me out! @srush_nlp @colinraffel @ilyasut
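For reference, the sinusoidal encoding from "Attention Is All You Need" (Vaswani et al., 2017) sets PE(pos, 2i) = sin(pos / 10000^{2i/d_model}) and PE(pos, 2i+1) = cos(pos / 10000^{2i/d_model}). Since sin(a)sin(b) + cos(a)cos(b) = cos(a - b), the inner product of two encodings is a sum of cosines of the offset p - q alone, which is one way to read the "nice" autocovariance structure. A minimal pure-Python sketch that checks this numerically; the function names (`sinusoidal_pe`, `pe_kernel`, `dot`) are illustrative, not from any library:

```python
import math

def sinusoidal_pe(num_positions, d_model, base=10000.0):
    """Sinusoidal encodings: even dims use sin, odd dims use cos, per frequency."""
    assert d_model % 2 == 0, "d_model must be even to pair sin/cos"
    pe = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model // 2):
            omega = 1.0 / base ** (2 * i / d_model)  # frequency of the i-th pair
            row.append(math.sin(pos * omega))        # dimension 2i
            row.append(math.cos(pos * omega))        # dimension 2i + 1
        pe.append(row)
    return pe

def dot(u, v):
    """Plain inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def pe_kernel(offset, d_model, base=10000.0):
    """Closed form of PE(p) . PE(q): sum_i cos(omega_i * (p - q))."""
    return sum(math.cos(offset / base ** (2 * i / d_model)) for i in range(d_model // 2))

pe = sinusoidal_pe(64, 16)
# Same offset (4) at different absolute positions gives the same inner product.
print(dot(pe[3], pe[7]), dot(pe[20], pe[24]), pe_kernel(4, 16))
```

Every sin/cos pair contributes sin^2 + cos^2 = 1 to a self inner product, so each encoding has squared norm d_model / 2 regardless of position.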

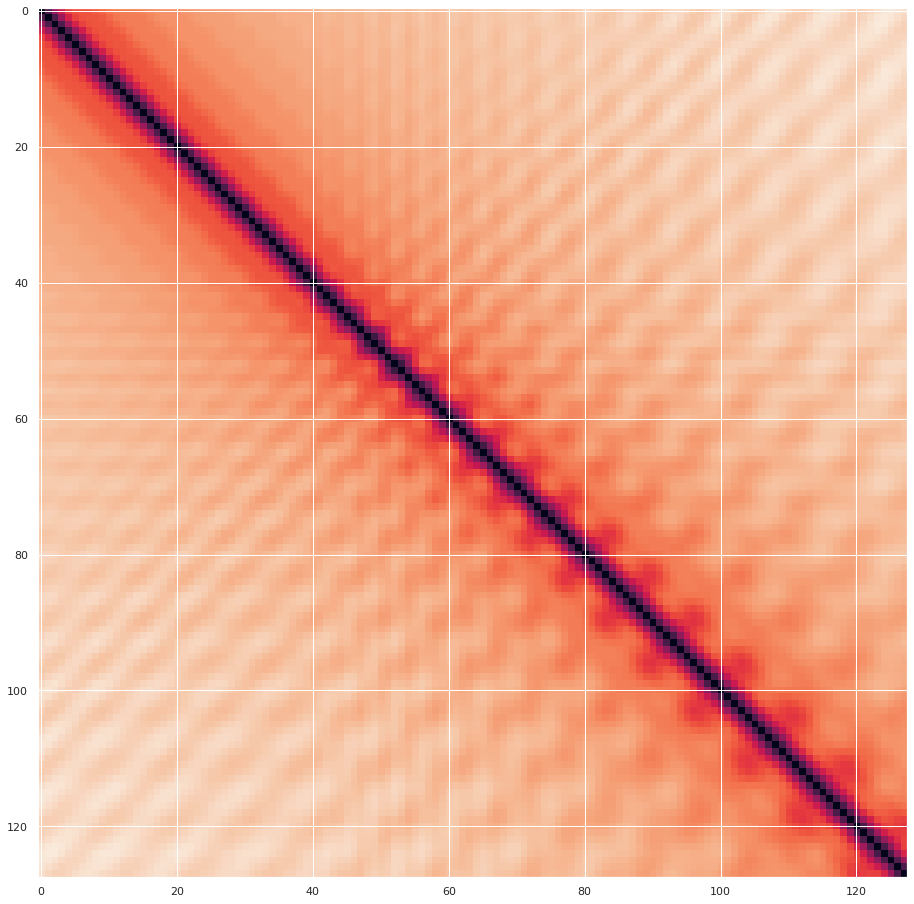

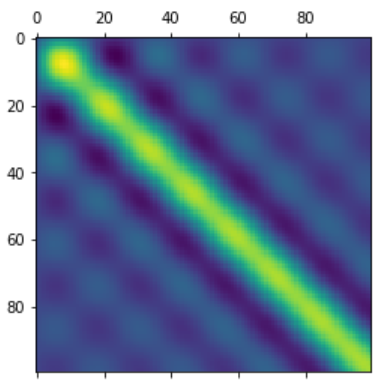

2/ Alternatively, I could sample each coordinate slice (d_model slices in total) from a 1D Gaussian process with a kernel such as K(x, y) = e^{-(x-y)^2}, so that the ith positional embedding vector is the value at position i across all d_model GP samples. This would give a nicer autocovariance. Has anyone tried this?
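A minimal pure-Python sketch of the proposal, assuming integer positions 0..N-1, the kernel K(x, y) = e^{-(x-y)^2} from the tweet (the `lengthscale` argument is a hypothetical addition; its default of 1.0 reproduces that kernel), a small diagonal `jitter` for numerical stability, and sampling via a Cholesky factor (f = L z with z ~ N(0, I)). None of the names here come from the thread or a library:

```python
import math
import random

def rbf_kernel(x, y, lengthscale=1.0):
    # K(x, y) = exp(-(x - y)^2 / lengthscale^2); lengthscale=1 is the tweet's kernel
    return math.exp(-((x - y) / lengthscale) ** 2)

def cholesky(a):
    """Lower-triangular L with L L^T = a, for symmetric positive definite a."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low

def dot(u, v):
    """Plain inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def gp_positional_embeddings(num_positions, d_model, lengthscale=1.0,
                             jitter=1e-6, seed=0):
    """Row p is position p's embedding; column j is the j-th independent GP sample."""
    rng = random.Random(seed)
    # Covariance of one GP sample evaluated at all positions, plus jitter on the diagonal.
    cov = [[rbf_kernel(p, q, lengthscale) + (jitter if p == q else 0.0)
            for q in range(num_positions)] for p in range(num_positions)]
    low = cholesky(cov)
    pe = [[0.0] * d_model for _ in range(num_positions)]
    for j in range(d_model):
        # One GP sample f ~ N(0, K) over all positions, computed as f = L z.
        z = [rng.gauss(0.0, 1.0) for _ in range(num_positions)]
        for p in range(num_positions):
            pe[p][j] = sum(low[p][k] * z[k] for k in range(p + 1))
    return pe

d_model = 2000
pe = gp_positional_embeddings(8, d_model, seed=1)
# Normalized inner products should be close to K(0, 0) = 1, K(0, 1) = e^-1, K(0, 5) ~ 0.
print(dot(pe[0], pe[0]) / d_model, dot(pe[0], pe[1]) / d_model, dot(pe[0], pe[5]) / d_model)
```

Because the d_model slices are independent draws, E[PE(p) . PE(q)] = d_model * K(p, q) (ignoring the jitter), so the inner product matches the kernel only in expectation, whereas the sinusoidal version is exactly offset-dependent. Note also that the embeddings are drawn jointly for a fixed set of positions, so longer sequences would need new samples conditioned on the existing ones.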
